My Raspberry Pi is at the heart of all my homelab shenanigans, from nginx serving as reverse proxy for some of my services hosted on my other servers, to updating my domains, cloudflared tunnel, VPN, and a boatload of other crap… long story short, it play an extremely crucial role in my setup.

I’m not proud to say this, but for how important of a role that my Raspberry Pi plays, it has among one of the jankiest setups of all time. It was running with a Samsung charger that was meant for the Galaxy S4 , circa mid 2013. Its boot-up drive used to be an old Kingston USB3.0 thumb drive that literally died three weeks after deploying it(could no longer boot up cuz it got corrupted lmao). Then I moved on to using an SSD connected through an old portable hard drive SATA to USB converter from like my very first USB3 portable hard drive(cuz its original drive also died). However, that SSD is from my personal PC that I had used for a good five years.(before I finally upgraded to Ryzen and got myself an NVME SSD) To be fair, that drive didn’t get much use as I used for dual booting into Hackintosh.

Yet, for how terrible this setup is, it actually worked fine. I deployed it a few weeks before I left Malaysia, and it has served me 24/7 for nearly a year with no issues at all.

Sh*t had hit the fan.

During my summer break, I returned home from Singapore and didn’t have much to do. I spent a lot more time doing some more shenanigans on my Raspberry Pi. However, one day, as I was video calling my friend, I wanted to use OME to share my screen because we were talking about Lightroom and Flor grading. I wanted the full-quality experience instead of whatever the crap discord gives you.

Anyways, it wasn’t working. So I thought, “Oh perhaps the container is stopped or the SSL certificate expired or something.” But then, I couldn’t even access my Raspberry Pi, my Portainer wouldn’t load, nor could I SSH into my Raspberry Pi. This was the first time that it happened, so I didn’t really think much of it. But thankfully, this happened when I was at home. I went downstairs and looked at the Raspberry Pi; it seemed fine to be honest. So I thought, perhaps the Raspberry Pi had locked up for some reason, and I proceeded to just power cycle it.

And well, everything worked again, and nothing seemed out of the ordinary.

And then it kept happening…

A few days later, I wanted to work on my Talkyard project, and I couldn’t. Once again, no response in SSH. I looked at it, and the lights are still on and blinking occasionally. However, I see a solid light at the ethernet port. I pulled up my router page and checked for devices connected to it. Oddly, my Raspberry Pi was not there. Then I thought, is the Samsung charger finally giving up the ghost? Is it finally dying on me now? Because Raspberry Pis are known to be quite finicky about the power supply, and knowing the age of the power supply, it was a fairly reasonable suspicion.

So I quickly swapped out the power supply with an old(facepalm!) Apple 10W charger that I had. I mean, to be fair, Apple chargers are known to be among the best for stability and reliability. And yet, it kept happening, which got me REAL concerned because this meant that the drive might potentially be corrupted, causing errors leading to the Raspberry Pi locking up. I googled and tried every way possible to do a filesystem check and whatnot, hoping that that would fix it. I also tried installing all the updates I could possibly do, from doing basic apt update to installing unreleased firmware through rpi-update.

Still, nada. Zero, zip, zilch, nada. It kept dying after a while, and it got me really frustrated. It was affecting some of my services because of how important of a role it plays.

Eventually, I used all ten of my brain cells to figure out a better way to diagnose it, and tried to see how can I monitor it more closely. That’s when I finally wisen up and went to look at the system logs. I mean, that should’ve been my first step, but I’m not that smart ok HAHA.

sudo nano /var/log/syslogHere’s the command to do it, FYI. To my ABSOLUTE horror, the log file was LONG, like super duper long. It had 96 thousand lines of logs. After I scrolled up beyond the initial system logs from the boot up after I power-cycled it, there were a crap ton of error messages.

There were a bunch of logs from the system and kernel that had something to do with docker network interface, and the other one is cloudflared complaining that it couldn’t connect to the server.

Jun 26 17:29:07 raspberrypi kernel: [178503.873236] docker0: port 2(vethd54ece1) entered blocking state

Jun 26 17:29:07 raspberrypi kernel: [178503.873258] docker0: port 2(vethd54ece1) entered disabled state

Jun 26 17:29:07 raspberrypi kernel: [178503.873684] device vethd54ece1 entered promiscuous mode

Jun 26 17:29:07 raspberrypi systemd-udevd[9296]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jun 26 17:29:07 raspberrypi systemd-udevd[9296]: Using default interface naming scheme 'v240'.

Jun 26 17:29:07 raspberrypi systemd-udevd[9296]: Could not generate persistent MAC address for vethd7461f6: No such file or directory

Jun 26 17:29:07 raspberrypi systemd-udevd[9297]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jun 26 17:29:07 raspberrypi systemd-udevd[9297]: Using default interface naming scheme 'v240'.

Jun 26 17:29:07 raspberrypi systemd-udevd[9297]: Could not generate persistent MAC address for vethd54ece1: No such file or directory

Jun 26 17:29:08 raspberrypi containerd[718]: time="2022-06-26T17:29:08.180696748+01:00" level=info msg="loading plugin \"io.containerd.event.v1.publisher\"..." runtime=io.containerd.runc.v2 type=io.containerd.event.v1

Jun 26 17:29:08 raspberrypi containerd[718]: time="2022-06-26T17:29:08.180954763+01:00" level=info msg="loading plugin \"io.containerd.internal.v1.shutdown\"..." runtime=io.containerd.runc.v2 type=io.containerd.internal.v1

Jun 26 17:29:08 raspberrypi containerd[718]: time="2022-06-26T17:29:08.181011355+01:00" level=info msg="loading plugin \"io.containerd.ttrpc.v1.task\"..." runtime=io.containerd.runc.v2 type=io.containerd.ttrpc.v1

Jun 26 17:29:08 raspberrypi containerd[718]: time="2022-06-26T17:29:08.181471016+01:00" level=info msg="starting signal loop" namespace=moby path=/run/containerd/io.containerd.runtime.v2.task/moby/34feffb9f6861545ff5df0aa2db705bbd6b4bb2e781efc70988a0fdd3f4c8ebd pid=9358 runtime=io.containerd.runc.v2Jun 26 17:29:21 raspberrypi cloudflared[703]: 2022-06-26T16:29:21Z ERR Unable to establish connection with Cloudflare edge error="DialContext error: dial tcp 198.41.200.193:7844: connect: network is unreachable" connIndex=0

Jun 26 17:29:21 raspberrypi cloudflared[703]: 2022-06-26T16:29:21Z ERR Serve tunnel error error="DialContext error: dial tcp 198.41.200.193:7844: connect: network is unreachable" connIndex=0

Jun 26 17:29:21 raspberrypi cloudflared[703]: 2022-06-26T16:29:21Z INF Retrying connection in up to 32s seconds connIndex=0

Jun 26 17:29:23 raspberrypi cloudflared[703]: 2022-06-26T16:29:23Z ERR Failed to create new quic connection error="failed to dial to edge: INTERNAL_ERROR: write udp [::]:58679->198.41.192.227:7844: sendto: network is unreachable" connIndex=1

Jun 26 17:29:23 raspberrypi cloudflared[703]: 2022-06-26T16:29:23Z ERR Serve tunnel error error="failed to dial to edge: INTERNAL_ERROR: write udp [::]:58679->198.41.192.227:7844: sendto: network is unreachable" connIndex=1

Jun 26 17:29:23 raspberrypi cloudflared[703]: 2022-06-26T16:29:23Z INF Retrying connection in up to 32s seconds connIndex=1

Jun 26 17:29:26 raspberrypi cloudflared[703]: 2022-06-26T16:29:26Z INF Switching to fallback protocol http2 connIndex=2

Jun 26 17:29:26 raspberrypi cloudflared[703]: 2022-06-26T16:29:26Z ERR Unable to establish connection with Cloudflare edge error="DialContext error: dial tcp 198.41.200.113:7844: connect: network is unreachable" connIndex=2

Jun 26 17:29:26 raspberrypi cloudflared[703]: 2022-06-26T16:29:26Z ERR Serve tunnel error error="DialContext error: dial tcp 198.41.200.113:7844: connect: network is unreachable" connIndex=2

Jun 26 17:29:26 raspberrypi cloudflared[703]: 2022-06-26T16:29:26Z INF Retrying connection in up to 1s seconds connIndex=2

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z INF Switching to fallback protocol http2 connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Unable to establish connection with Cloudflare edge error="DialContext error: dial tcp 198.41.192.227:7844: connect: network is unreachable" connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Serve tunnel error error="DialContext error: dial tcp 198.41.192.227:7844: connect: network is unreachable" connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z INF Retrying connection in up to 1s seconds connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Unable to establish connection with Cloudflare edge error="DialContext error: dial tcp 198.41.200.113:7844: connect: network is unreachable" connIndex=2

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Serve tunnel error error="DialContext error: dial tcp 198.41.200.113:7844: connect: network is unreachable" connIndex=2

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z INF Retrying connection in up to 4s seconds connIndex=2

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Unable to establish connection with Cloudflare edge error="DialContext error: dial tcp 198.41.192.227:7844: connect: network is unreachable" connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z ERR Serve tunnel error error="DialContext error: dial tcp 198.41.192.227:7844: connect: network is unreachable" connIndex=1

Jun 26 17:29:27 raspberrypi cloudflared[703]: 2022-06-26T16:29:27Z INF Retrying connection in up to 4s seconds connIndex=1

Jun 26 17:29:28 raspberrypi cloudflared[703]: 2022-06-26T16:29:28Z ERR Failed to create new quic connection error="failed to dial to edge: INTERNAL_ERROR: write udp [::]:48699->198.41.200.13:7844: sendto: network is unreachable" connIndex=0

Jun 26 17:29:28 raspberrypi cloudflared[703]: 2022-06-26T16:29:28Z ERR Serve tunnel error error="failed to dial to edge: INTERNAL_ERROR: write udp [::]:48699->198.41.200.13:7844: sendto: network is unreachable" connIndex=0

Jun 26 17:29:28 raspberrypi cloudflared[703]: 2022-06-26T16:29:28Z INF Retrying connection in up to 2s seconds connIndex=0But the more I scroll up and check; it doesn’t seem like the Raspberry Pi had locked up. I kept seeing days worth of logs, right up till I power-cycled it. However, I saw this peculiar error, it looks to have lost its IP allocation.

Jun 25 15:54:26 raspberrypi avahi-daemon[440]: Withdrawing address record for REDACTED on eth0.

Jun 25 15:54:26 raspberrypi avahi-daemon[440]: Leaving mDNS multicast group on interface eth0.IPv4 with address REDACTED.

Jun 25 15:54:26 raspberrypi avahi-daemon[440]: Interface eth0.IPv4 no longer relevant for mDNS.It didn’t really make sense, because I have the Raspberry Pi configured to have a static IP on the router side. Initially, my suspicion was on the fact that I have my router restart every three days, and perhaps after those restarts, the Raspberry Pi couldn’t negotiate a new IP. Still, as I will explain later on, that wasn’t the case, and it has always been like this since the beginning of dawn; no reason for it to fail now. I even updated my router’s firmware just to be extra sure, and still, no dice.

Whenever you google any issues regarding RPi network stability on Google, the first response that people always give you is to check your power supply, which I don’t really have a way of doing. But I tried overclocking the Raspberry Pi and stress-tested it just to verify that the power supply is able to provide the needed power. This command confirmed that there were no under-voltage issues.

vcgencmd get_throttled

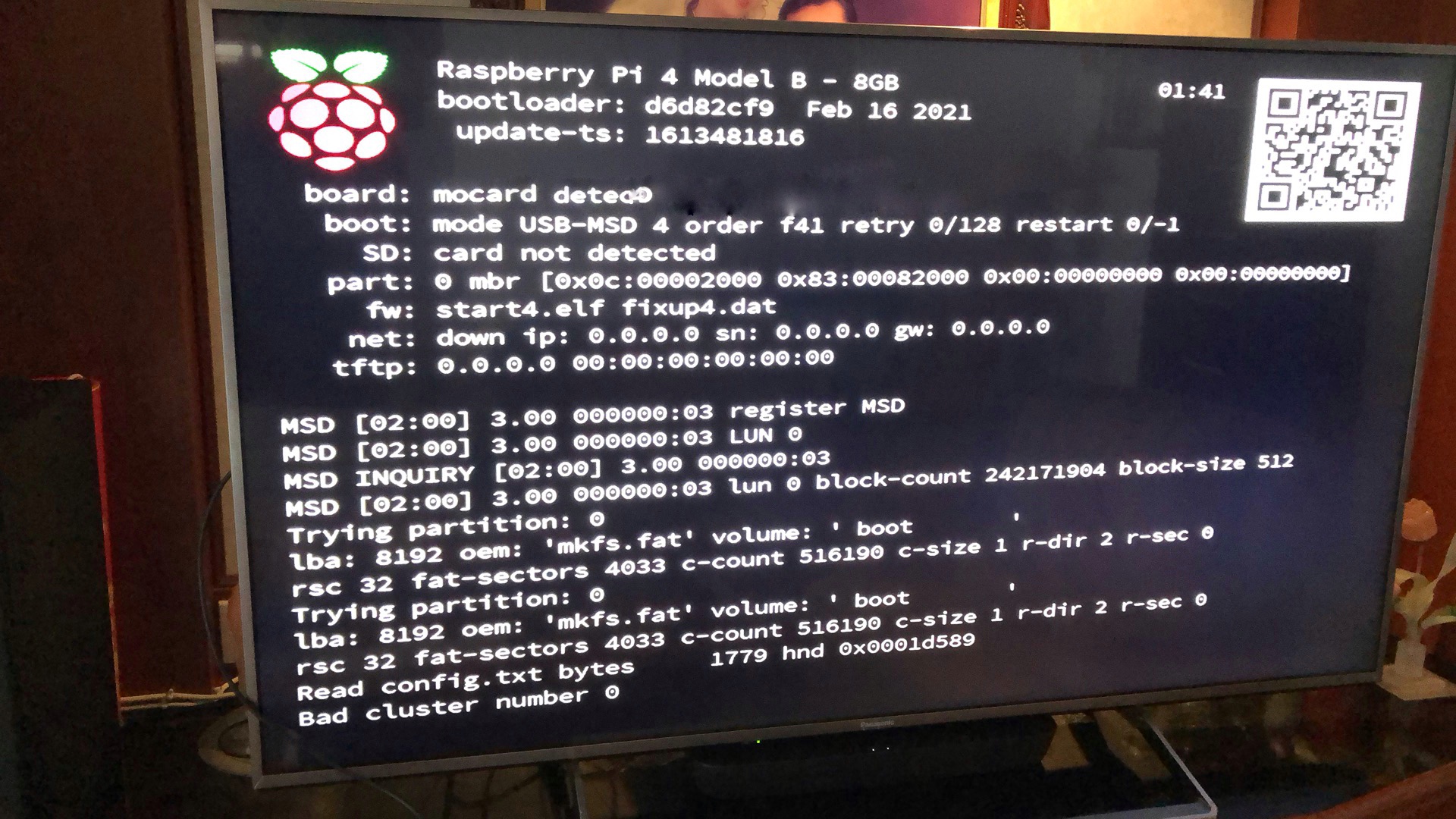

# throttled=0x0At this point, I was kind of at a loss and couldn’t really think of what the culprit was. I played with the ethernet power savings features and whatnot, still didn’t work. I eventually gave up and ordered the official power supply from element14(which took forever due to some complications). Even after swapping that in, nothing’s changed. I even tried connecting the RPi to a display to see if anything shows up, but as expected, nothing at all. At which, during this point in time, I was at a complete loss.

Pulseway to the rescue!

In case you didn’t know, Pulseway is a monitoring software, that I use because LinusTechTips advertised the snot out of it. I had Pulseway running on two servers already, so I couldn’t really install it on my RPi. This is due to the limitations on the free account, but it doesn’t really matter anyways because Pulseway has the ability for you to periodically ping any address you want, and it will alert you if the ping failed after several tries or after a certain period of time. I had that set up for my Raspberry Pi, and I mostly use that alerts to let me know if the RPi is down so I know to restart it.

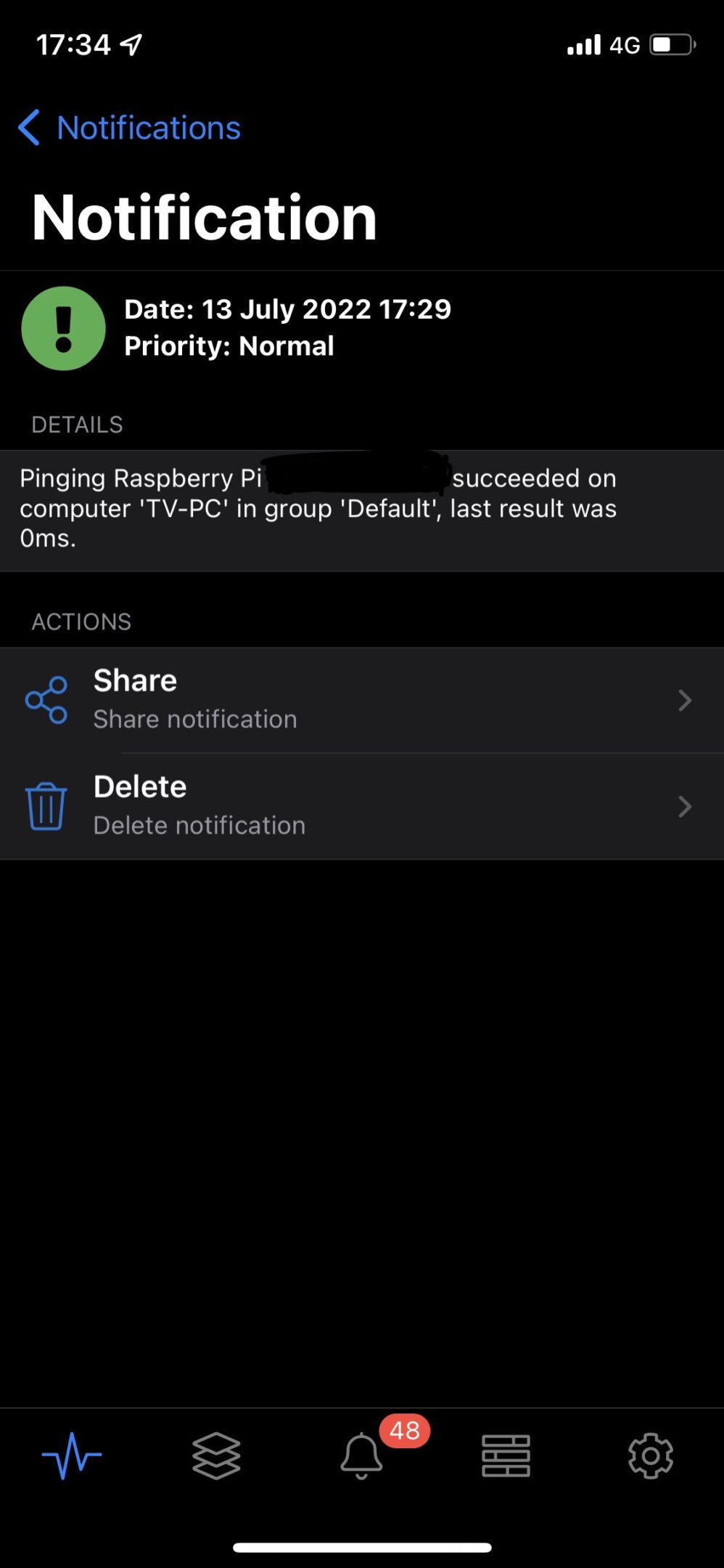

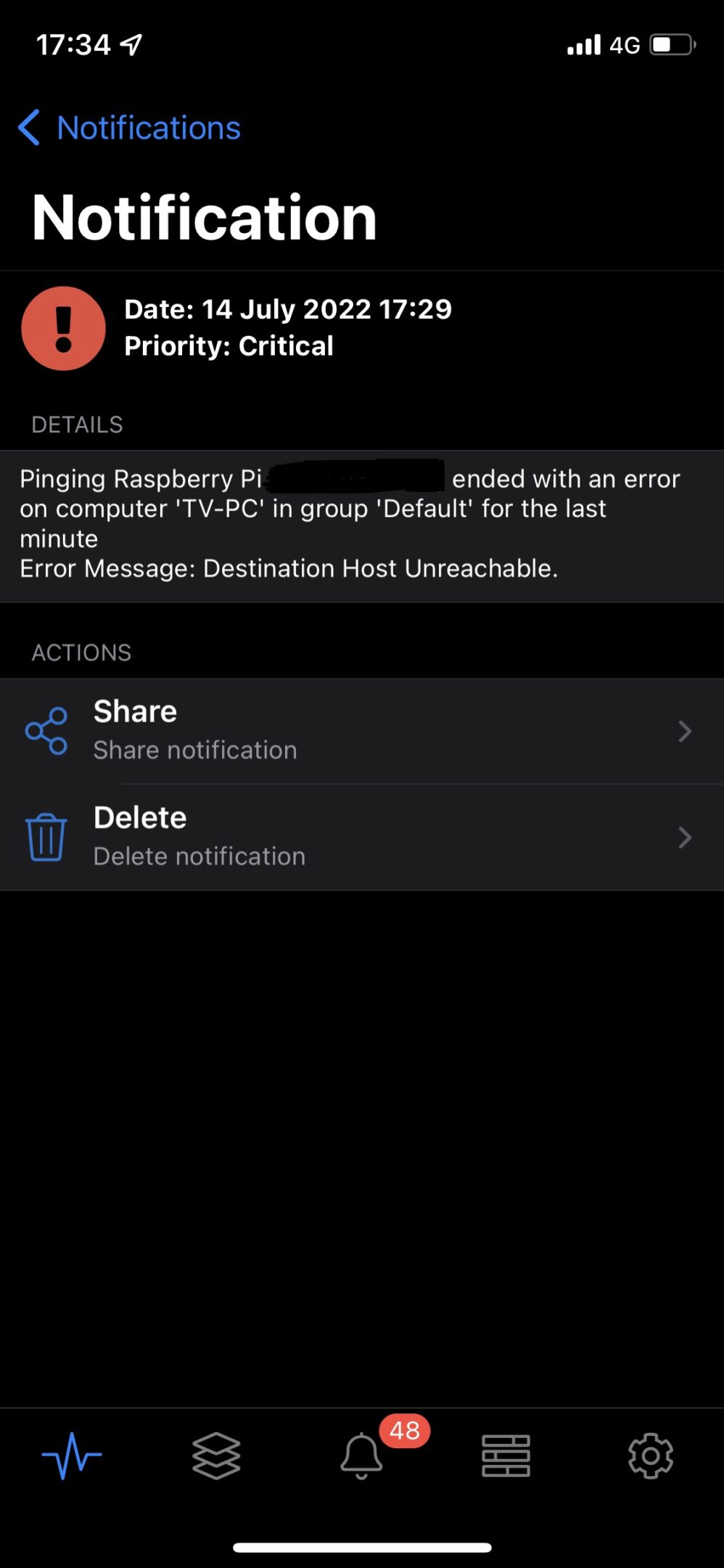

At first, I wanted to see if the Raspberry Pi fails during the night when the router restarts at 3AM, but that’s not the case. At the time, whenever I receive an alert for ping failure I would just restart it, and once it back up Pulseway would let me know that the pings are successful again.

However, I noticed something peculiar about the time frame between the two notifications, they are exactly 24 hours apart, which there is no way in hell that its purely coincidental. Then it struck me, that just happens to be the router DHCP lease time. I dug around the boot up logs for my Raspberry Pi, and would you look at that, 86400seconds(24hours). Here, I can confidently conclude that the Raspberry Pi is failing to renew its DHCP, but why?

Jul 13 17:17:15 raspberrypi dhcpcd[442]: eth0: leased REDACTED for 86400 seconds

Jul 13 17:17:15 raspberrypi avahi-daemon[430]: Joining mDNS multicast group on interface eth0.IPv4 with address REDACTED.

Jul 13 17:17:15 raspberrypi avahi-daemon[430]: New relevant interface eth0.IPv4 for mDNS.

Jul 13 17:17:15 raspberrypi dhcpcd[442]: eth0: adding route to REDACTED/24

Jul 13 17:17:15 raspberrypi avahi-daemon[430]: Registering new address record for REDACTED on eth0.IPv4.

Jul 13 17:17:15 raspberrypi dhcpcd[442]: eth0: adding default route via REDACTED

Jul 13 17:17:15 raspberrypi dhcpcd[442]: forked to background, child pid 705I continued digging around the logs files, this time focusing on logs from DHCPCD, and look what I found.

Jul 13 17:17:24 raspberrypi dhcpcd[705]: veth23ec073: waiting for carrier

Jul 13 17:17:24 raspberrypi systemd-udevd[1706]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jul 13 17:17:24 raspberrypi dhcpcd[705]: route socket overflowed - learning interface state

Jul 13 17:17:24 raspberrypi systemd-udevd[1706]: Could not generate persistent MAC address for veth0a73baf: No such file or directory

Jul 13 17:17:24 raspberrypi systemd-udevd[1743]: link_config: autonegotiation is unset or enabled, the speed and duplex are not writable.

Jul 13 17:17:24 raspberrypi kernel: [ 28.326079] IPv6: ADDRCONF(NETDEV_CHANGE): vetha236018: link becomes ready

Jul 13 17:17:24 raspberrypi kernel: [ 28.326167] br-b57ee8fef37b: port 1(vetha236018) entered blocking state

Jul 13 17:17:24 raspberrypi kernel: [ 28.326174] br-b57ee8fef37b: port 1(vetha236018) entered forwarding state

Jul 13 17:17:24 raspberrypi systemd-udevd[1743]: Could not generate persistent MAC address for veth79ad642: No such file or directory

Jul 13 17:17:24 raspberrypi kernel: [ 28.334633] docker0: port 6(veth79ad642) entered blocking state

Jul 13 17:17:24 raspberrypi kernel: [ 28.334646] docker0: port 6(veth79ad642) entered disabled state

Jul 13 17:17:25 raspberrypi kernel: [ 28.339162] device veth79ad642 entered promiscuous mode

Jul 13 17:17:25 raspberrypi containerd[724]: time="2022-07-13T17:17:24.979232300+08:00" level=info msg="starting signal loop" namespace=moby path=/run/containerd/io.containerd.runtime.v2.task/moby/0ab3f77fc388f1510bec223386fbf73e9ded77117347ee00b7a2e4b8d26bbf94 pid=1878 runtime=io.containerd.runc.v2

Jul 13 17:17:25 raspberrypi kernel: [ 28.349207] docker0: port 6(veth79ad642) entered blocking state

Jul 13 17:17:25 raspberrypi kernel: [ 28.349220] docker0: port 6(veth79ad642) entered forwarding stateLine 3 is the important one, and a quick google will lead you here: https://github.com/raspberrypi/linux/issues/4092

I won’t try to explain much, but long story short, DHCPCD got overwhelmed by the amount of network interface that I had. This was likely due to the fact that I had too many docker containers. While the Raspberry Pi has a static IP assigned through the router, to the RPi, it still has to do the standard DHCP IP negotiations. Since DHCPCD died, no DHCP negotiations are being done to renew the lease or to negotiate an IP. My RPi was essentially just sitting ducks. But luckily, the solution is fairly simple.

#Fastest workaround:

sudo nano /etc/dhcpcd.conf

$Insert the following line at the end:

denyinterfaces veth*And well, it worked! What this does is tell DHCPCD to ignore the interfaces which starts with veth, which is what docker uses for its virtual networks. This stops DHCPCD from crashing and prevents the issue of renewing the lease. I even doubled down by assigning a static IP directly in the DHCPCD config file so I don’t ever have to worry about DHCP.

That’s all I guess

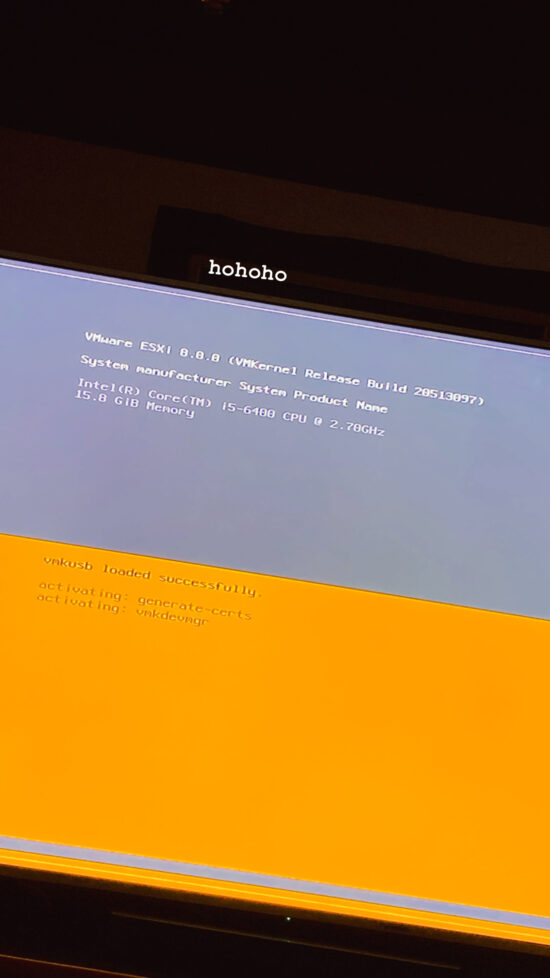

I think the reason this happened in the first place was because I was working on the Talkyard project at the time, and I was building and removing a lot of docker containers, not to mention all the different stuff from other miscellaneous projects as well. Anyways, the problem is solved now, and thanks to this, my RPi setup is now less janky, thanks to the new power supply. I also got a UPS for the RPi and router so my set up stays up even during blackouts. The end :)